In the previous post I announced the first release of GPU-Surf. Now I’m glad to show you a live video demo of GPU-Surf, along with another demo using Bundler (a structure-from-motion tool):

There are three demos in this video:

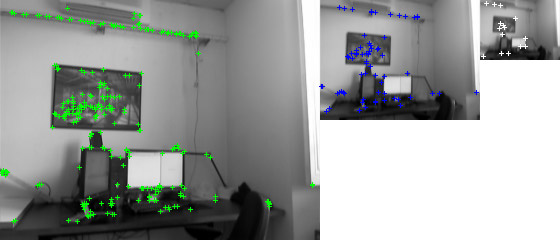

- GPU-Surf live demo.

- PlyReader displaying Notre-Dame dataset.

- PlyReader displaying my own dataset (Place de la Bourse, Bordeaux).

GPU-Surf

You’ll find more information in the dedicated demo section.

In this video GPU-Surf was running slowly because of Ogre::Canvas, but it should run much faster.

PlyReader displaying Notre-Dame dataset

I’m also interested in structure-from-motion algorithms, which is why I tested Bundler; it comes with a good dataset of Notre-Dame de Paris.

I have created a very simple PlyReader using Ogre3D. The first version used billboards to display the point cloud, but it was slow (30 fps with 130k points). Now I’m using a custom vertex buffer and it runs at 800 fps with 130k points.
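To give an idea of why the vertex-buffer version is so much faster: each point becomes one small fixed-size record in a contiguous array, which can be handed to the GPU in a single copy instead of creating one billboard per point. The following is an illustrative sketch of the loading side, not the actual PlyReader code. It assumes an ASCII PLY file whose vertices are stored as `x y z r g b` and whose other elements (such as faces) are empty; `PointVertex` and `readAsciiPly` are names made up for this example, and the Ogre3D upload itself is left out.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <istream>
#include <sstream>
#include <string>
#include <vector>

// One point as it would sit in an interleaved vertex buffer:
// 3 floats for the position + 4 bytes for an RGBA colour.
// (Hypothetical layout chosen for this sketch.)
struct PointVertex {
    float x, y, z;
    std::uint8_t r, g, b, a;
};
static_assert(sizeof(PointVertex) == 16, "expected a tightly packed 16-byte vertex");

// Parses an ASCII PLY stream with "x y z r g b" vertices.
// Assumption: vertex data directly follows the header (no non-empty
// element is stored before it). Returns false on a bad header or
// truncated vertex data.
bool readAsciiPly(std::istream& in, std::vector<PointVertex>& out)
{
    std::string line;
    if (!std::getline(in, line) || line.rfind("ply", 0) != 0)
        return false;

    std::size_t vertexCount = 0;
    bool headerEnded = false;
    while (std::getline(in, line)) {
        std::istringstream fields(line);
        std::string keyword;
        fields >> keyword;
        if (keyword == "element") {
            std::string name;
            std::size_t count = 0;
            fields >> name >> count;
            if (name == "vertex")
                vertexCount = count;
        } else if (keyword == "end_header") {
            headerEnded = true;
            break;
        }
    }
    if (!headerEnded)
        return false;

    out.clear();
    out.reserve(vertexCount);
    for (std::size_t i = 0; i < vertexCount; ++i) {
        PointVertex v{};
        int r = 0, g = 0, b = 0;
        if (!(in >> v.x >> v.y >> v.z >> r >> g >> b))
            return false;
        v.r = static_cast<std::uint8_t>(r);
        v.g = static_cast<std::uint8_t>(g);
        v.b = static_cast<std::uint8_t>(b);
        v.a = 255;  // fully opaque
        out.push_back(v);
    }
    assert(out.size() == vertexCount);
    return true;
}
```

The resulting `std::vector<PointVertex>` is contiguous, so `out.data()` and `out.size() * sizeof(PointVertex)` are all that is needed to fill a hardware vertex buffer in one call.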

The reconstruction was done by the team who created Bundler, from 715 pictures of Notre-Dame de Paris (thanks to Flickr). In fact, they did most of the work in this demo: I just grabbed their output to check that my PlyReader was capable of reading such a big file.

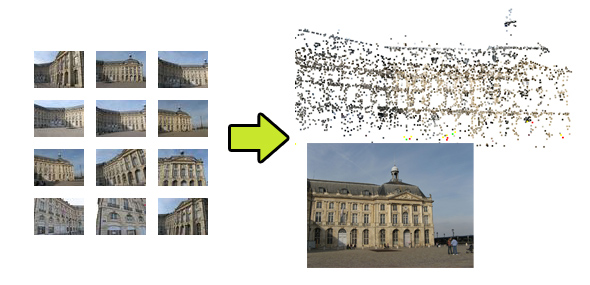

PlyReader displaying my own dataset

If you have already used Bundler, you know that structure-from-motion algorithms need a very slow pre-processing step to get “matches” between the pictures of the dataset. Bundler is packaged to use Lowe’s SIFT binary, but it is very slow because it takes PGM pictures as input and writes its output to a text file. A matching step is then executed using KeyMatchFull.exe, which is optimized using libANN but is still very slow.
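For readers who have never looked at the matching step, here is a minimal sketch of what “getting matches” between two pictures means: for each descriptor of the first picture, find its nearest neighbour in the second one and keep the pair only if it is clearly better than the second-best candidate (Lowe’s ratio test). This is a plain brute-force version for illustration only; it is not how KeyMatchFull (approximate search with libANN) or SiftGPU implement it. The `Descriptor` type, the function names and the default ratio of 0.6 are assumptions made for this example.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <utility>
#include <vector>

// A SIFT descriptor: 128 values in [0, 255].
using Descriptor = std::array<std::uint8_t, 128>;

// Squared Euclidean distance between two descriptors.
std::int64_t squaredDistance(const Descriptor& a, const Descriptor& b)
{
    std::int64_t sum = 0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const std::int64_t d = std::int64_t(a[i]) - std::int64_t(b[i]);
        sum += d * d;
    }
    return sum;
}

// Returns (index in a, index in b) pairs that pass the ratio test.
// Brute force: O(|a| * |b|) distance computations.
std::vector<std::pair<int, int>> matchDescriptors(
    const std::vector<Descriptor>& a,
    const std::vector<Descriptor>& b,
    double ratio = 0.6)  // assumed threshold, tune for your data
{
    assert(ratio > 0.0 && ratio < 1.0);
    std::vector<std::pair<int, int>> matches;
    if (b.size() < 2)
        return matches;  // the ratio test needs two candidates

    for (std::size_t i = 0; i < a.size(); ++i) {
        std::int64_t best = std::numeric_limits<std::int64_t>::max();
        std::int64_t second = best;
        int bestIndex = -1;
        for (std::size_t j = 0; j < b.size(); ++j) {
            const std::int64_t d = squaredDistance(a[i], b[j]);
            if (d < best) {
                second = best;
                best = d;
                bestIndex = static_cast<int>(j);
            } else if (d < second) {
                second = d;
            }
        }
        // Distances are squared, so the ratio is squared as well.
        if (static_cast<double>(best) < ratio * ratio * static_cast<double>(second))
            matches.emplace_back(static_cast<int>(i), bestIndex);
    }
    return matches;
}
```

With a few thousand features per picture and every pair of pictures to compare, the quadratic cost of this loop adds up quickly, which is why an approximate or GPU-based search is used in practice.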

I have replaced the feature extraction and matching steps with my own tool: BundlerMatcher. It uses SiftGPU, which gives a very nice speed-up. As my current implementation of GPU-Surf isn’t complete, I can’t use it instead of SiftGPU yet, but that is my intention.

| 23 pictures taken with a classic camera (Canon Powershot A700) | Point cloud generated using Bundler |
| --- | --- |

I created this dataset with my camera and matched the pictures using my own tool, BundlerMatcher. This tool creates the same .key files as Lowe’s SIFT tool, plus a matches.txt file that is used by Bundler. I tried to get rid of these temporary .key files and keep everything in memory, but changing the Bundler code to handle this was harder than I predicted… I’m now more interested in the insight3d implementation (presentation, source), which seems easier to hack on.
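As a rough illustration of the two text files mentioned above, here is a sketch of writers for both. It is not the BundlerMatcher source, and the layouts are written from my understanding of the formats, so treat them as assumptions: a .key file starts with the number of keypoints and the descriptor length (128), then gives for each keypoint a line with row, column, scale and orientation followed by the 128 descriptor values, 20 per line; matches.txt contains, for each pair of pictures, the two picture indices, the number of matches, then one line per match with the two keypoint indices. `Keypoint`, `writeKeyFile` and `writeMatchBlock` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <ostream>
#include <sstream>
#include <string>
#include <utility>
#include <vector>

// Hypothetical in-memory keypoint used by this sketch.
struct Keypoint {
    float row, col, scale, orientation;
    std::array<std::uint8_t, 128> descriptor;
};

// Writes keypoints in the assumed Lowe-style text layout:
// header, then per keypoint one location line and 7 descriptor
// lines (6 lines of 20 values and a last line of 8).
void writeKeyFile(std::ostream& out, const std::vector<Keypoint>& keys)
{
    out << keys.size() << " 128\n";
    for (const Keypoint& k : keys) {
        out << k.row << ' ' << k.col << ' ' << k.scale << ' '
            << k.orientation << '\n';
        for (std::size_t i = 0; i < k.descriptor.size(); ++i) {
            out << ' ' << static_cast<int>(k.descriptor[i]);
            if (i % 20 == 19 || i + 1 == k.descriptor.size())
                out << '\n';
        }
    }
}

// Appends the matches of one picture pair in the assumed
// matches.txt layout: "a b", match count, then "keyA keyB" lines.
void writeMatchBlock(std::ostream& out, int imageA, int imageB,
                     const std::vector<std::pair<int, int>>& matches)
{
    assert(imageA != imageB);  // a picture is never matched with itself
    out << imageA << ' ' << imageB << '\n' << matches.size() << '\n';
    for (const std::pair<int, int>& m : matches)
        out << m.first << ' ' << m.second << '\n';
}
```

Both functions take a `std::ostream`, so the same code can write to a `std::ofstream` for Bundler or to a `std::ostringstream` when everything should stay in memory.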